Applicant

Prof. Peter Uhrig

Department of English and American Studies

Friedrich-Alexander-Universität Erlangen-Nürnberg

Project Summary

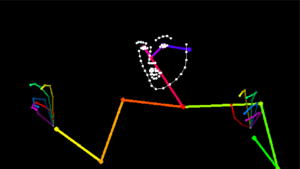

The detection of co-speech gesture in large-scale video data “in the wild”, i.e. in unrestricted media recordings, is non-trivial, but it has improved massively with the introduction of robust deep-learning-based pose estimation software such as OpenPose (Cao et al. 2018). The accompanying image shows the annotations created by the software on an image of Donald Trump performing a two-handed gesture known as air quotes.

Statistics on the visibility of hands on screen were produced to determine which shows are more promising for gesture research. Applying the SPUDNIG approach (Ripperda et al. 2020) to unrestricted media data is not possible, but a more robust approach based on scene detection and active speaker identification has since been completed in the subsequent DFG/AHRC-funded project “World Futures”, which builds on the results of this KONWIHR project.
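To illustrate what a hand-visibility statistic of this kind could look like, here is a minimal sketch. It is not the project's actual method, which the report does not describe. It assumes OpenPose's per-frame JSON output with the BODY_25 model, in which keypoints 4 and 7 are the right and left wrist. The function names and the confidence threshold of 0.3 are hypothetical choices made for this example.

```python
# Sketch only: assumes OpenPose per-frame JSON (BODY_25 model), where
# "pose_keypoints_2d" is a flat list of x, y, confidence triples.
RIGHT_WRIST, LEFT_WRIST = 4, 7  # BODY_25 keypoint indices


def wrist_visible(person, min_conf=0.3):
    """Return True if either wrist keypoint has confidence >= min_conf."""
    keypoints = person.get("pose_keypoints_2d", [])
    for index in (RIGHT_WRIST, LEFT_WRIST):
        conf_pos = 3 * index + 2  # position of the confidence value
        if conf_pos < len(keypoints) and keypoints[conf_pos] >= min_conf:
            return True
    return False


def hand_visibility_ratio(frames, min_conf=0.3):
    """Fraction of frames in which at least one person shows a wrist."""
    if not frames:
        return 0.0
    visible = sum(
        1
        for frame in frames
        if any(wrist_visible(p, min_conf) for p in frame.get("people", []))
    )
    return visible / len(frames)
```

Here `frames` would be a list of dictionaries, one per video frame, each loaded with `json.load` from the files OpenPose writes when run with `--write_json`. Comparing the resulting ratio across shows gives a rough ranking of how often hands are on screen.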

Extensive benchmarking and optimization were carried out in the project, which allowed us to build a set of portable Singularity containers that make optimal use of the various generations of Nvidia GPUs, including the latest additions to FAU’s GPU zoo, the A40 and A100 cards in the Alex cluster. The optimal target system for our purposes is the A40, because it is much more cost-effective than the A100 but equally fast on the inference task.

Overall, the improved HPC processes created for this project have benefitted multiple research projects and have been used in several publications, showing the great impact KONWIHR funding can have in the preparation of larger-scale grant applications.

Publications

- Peter Uhrig (2022): Large-Scale Multimodal Corpus Linguistics – The Big Data Turn. Habilitation thesis, FAU Erlangen-Nürnberg.

- Elnaz Shafaei-Bajestan/Masoumeh Moradipour-Tari/Peter Uhrig/Harald Baayen (2021): “LDL-AURIS: Error-driven Learning in Modeling Spoken Word Recognition.” Language, Cognition and Neuroscience.

- Yash Khasbage/Daniel Alcaraz Carrión/Jennifer Hinnell/Frankie Robertson/Karan Singla/Peter Uhrig/Mark Turner (2022): “The Red Hen Anonymizer and the Red Hen Protocol for de-identifying audiovisual recordings.” Linguistics Vanguard.

- Peter Uhrig (2022): “Hand Gestures with Verbs of Throwing: Collostructions, Style and Metaphor.” In: Beate Hampe & Anja Binanzer (eds.). Yearbook of the German Association of Cognitive Linguistics. Berlin/Boston: De Gruyter.